Here’s another update for the js web framework benchmark. This time the benchmark has seen lots of contributions:

- Dominic Gannaway updated and optimized inferno

- Boris Kaul added the kivi framework

- Chris Reeves contributed the edge version of ractive

- Michel Weststrate updated react-mobX

- Gianluca Guarini updated the riot benchmark

- Gyandeep Singh added mithril 1.0-alpha

- Leon Sorokin contributed domvm

- Boris Letocha added bobril

- Jeremy Danyow rewrote the aurelia benchmark

- Filatov Dmitry updated the vidom benchmark

- Dan Abramov, Mark Struck, Baptiste Augrain and many more…

Thanks to all of you for contributing!

This suddenly turned the project from something where I tried to write the benchmarks with a healthy smattering of knowledge into a fairly well-reviewed and tuned comparison. It was especially interesting to see that some framework authors took notice of the benchmarks and improved them (and sometimes even the frameworks) or added development versions to the comparison. Some of the slower frameworks in particular show nice improvements.

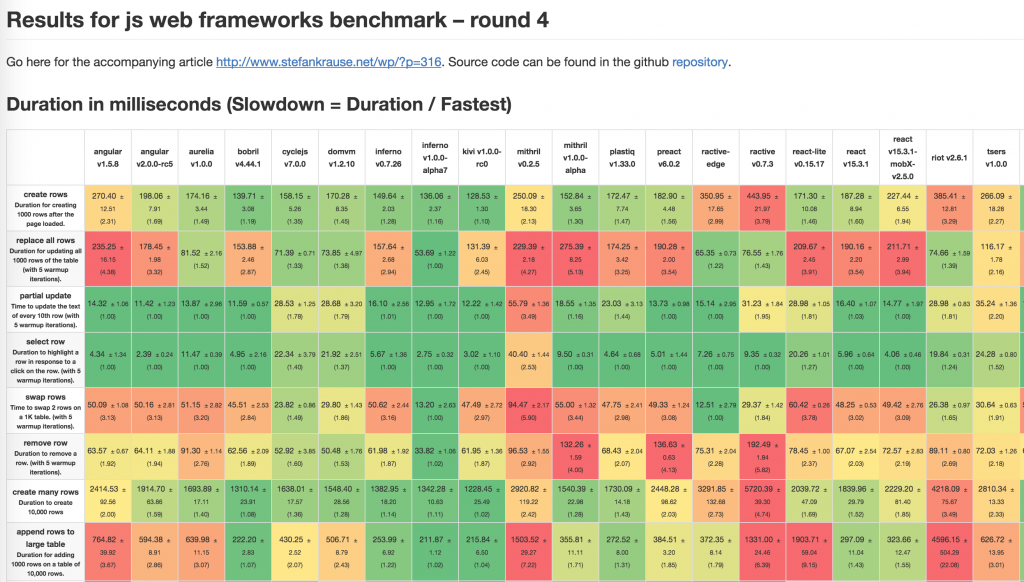

Here’s the updated result table (on my MacBook Pro, Chrome 53):

Important results

(Disclaimer: For each benchmark, the duration of a framework is divided by the duration of the fastest framework for that benchmark; the score is the geometric mean of these ratios. A factor X indicates that a framework takes on average X times as long as the fastest version (which is usually vanillajs). In the discussion below I’ll talk about the percentage by which a framework is slower than vanillajs: Angular 2 has a factor of 1.82, so I’ll say it’s 82% behind or slower than vanillajs.)
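The scoring described in the disclaimer can be sketched as follows. This is a minimal TypeScript sketch, not the benchmark's actual code: it assumes durations are available as a hypothetical nested record `durations[framework][benchmark]` (in ms) and that every framework has a result for every benchmark. The geometric mean is computed as the exponential of the mean log ratio.

```typescript
// Hypothetical input shape: durations[framework][benchmark] in milliseconds.
type Durations = Record<string, Record<string, number>>;

// Slowdown factor per framework: geometric mean over all benchmarks of
// (framework duration / fastest duration for that benchmark).
function slowdownFactors(durations: Durations): Record<string, number> {
  const frameworks = Object.keys(durations);
  const benchmarks = Object.keys(durations[frameworks[0]]);

  // Fastest duration per benchmark across all frameworks.
  const fastest: Record<string, number> = {};
  for (const b of benchmarks) {
    fastest[b] = Math.min(...frameworks.map((f) => durations[f][b]));
  }

  const factors: Record<string, number> = {};
  for (const f of frameworks) {
    // Geometric mean computed as exp(mean of log ratios).
    const logSum = benchmarks.reduce(
      (sum, b) => sum + Math.log(durations[f][b] / fastest[b]),
      0,
    );
    factors[f] = Math.exp(logSum / benchmarks.length);
  }
  return factors;
}

// A factor of 1.82 is reported as "82% behind".
function percentBehind(factor: number): number {
  return Math.round((factor - 1) * 100);
}

// Made-up numbers for illustration: ratios 1 and 4 give a factor of about 2.
const factors = slowdownFactors({
  vanillajs: { create: 100, swap: 10 },
  someFramework: { create: 100, swap: 40 },
});
console.log(factors["someFramework"], percentBehind(factors["someFramework"]));
```

Using the geometric rather than the arithmetic mean keeps a single very slow benchmark from dominating the overall factor.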

- Inferno is crazy fast. It’s only 7% slower than vanillajs, and I had to add some tricks to vanillajs to match inferno. The remove row benchmark in particular needed a modification: simply removing the row from the DOM causes a long style recalculation. This can be avoided by shifting the data of all rows below the deleted one up by one row and then deleting the last row.

  Inferno also shines by showing virtually no worst-case scenario.

- Kivi is the second fastest, only 32% behind vanillajs.

- vue 2-beta1 is amazing. It’s only 37% slower than vanillajs. This is a large improvement from vue 1, which is 116% behind vanillajs. The best thing about this improvement is that the application code stayed unchanged (take that angular!).

- bobril and domvm are quite quick, only 40% and 63% behind vanillajs respectively.

- The aurelia benchmark got a rewrite and it’s pretty good now, though I doubt I’ll like its way of (not) dirty checking. It’s now only 66% behind, a bit faster than both react and angular 2. Last time it was 120% behind vanillajs.

- Riot is new in this round, but it doesn’t perform well in this benchmark. On average it takes three times as long as vanillajs.

- React v15.2 was 140% behind. It improved to 82% due to an optimization in react that makes the clear rows benchmark much faster.

- Ractive is currently one of the slowest frameworks, 260% behind. The edge version shows an impressive improvement, at 87%.

- The same goes for mithril, which improves from 190% slower to 83%.

- Cycle.js 7, plastiq and vidom are pretty much unchanged and still perform very well.

It’s very impressive to see how well those frameworks can perform and how much improvement is possible.
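The remove row trick used for vanillajs can be modeled without a browser. This is a simplified TypeScript sketch, not the actual vanillajs source: `RowData` and `RowView` are hypothetical stand-ins for the model and for one `<tr>` whose cell texts can be updated. Instead of removing the row element at the deleted index, every following view is refilled with the data of the next row and only the last view is dropped.

```typescript
// Hypothetical stand-ins: RowData is the model, RowView mimics one <tr>.
interface RowData {
  id: number;
  label: string;
}
interface RowView {
  idText: string;
  labelText: string;
}

// Remove data[index] without removing the view at that index:
// each following view shows the data of the row below it,
// and only the last view is dropped.
function removeRowByShifting(data: RowData[], views: RowView[], index: number): void {
  data.splice(index, 1);
  for (let i = index; i < data.length; i++) {
    views[i].idText = String(data[i].id);
    views[i].labelText = data[i].label;
  }
  views.pop(); // in the DOM this would remove the last <tr> element
}

const data: RowData[] = ["a", "b", "c", "d"].map((label, i) => ({ id: i + 1, label }));
const views: RowView[] = data.map((d) => ({ idText: String(d.id), labelText: d.label }));
removeRowByShifting(data, views, 1);
console.log(views.map((v) => v.labelText));
```

The trade-off is that deleting a row near the top turns into many text updates; per the observation above, in Chrome this was still cheaper than the style recalculation triggered by removing a row from the middle of the table.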

The test driver was rewritten in TypeScript, replacing the Java version. One goal was to reduce the time needed to run the benchmarks, which meant removing all Thread.sleep workarounds. Running the benchmarks for angular 2, for example, now takes 3:40 minutes instead of 10:48. The new test runner also supports running only selected frameworks (e.g. `npm run selenium -- args --framework angular-v2`) and/or benchmarks (`npm run selenium -- args --benchmark 01_`).

The test runner measures the duration for all frameworks from the Chrome timeline, including repaint. If you want to try a simplified metric in the browser: all frameworks log the duration from the start of the event until repainting has finished to the console. These figures appear to be close to the ones extracted from the timeline; if there’s a difference, trust the timeline.
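How such a selection could work is sketched below. This is a hypothetical TypeScript sketch, not the runner's code: it assumes that the values after `--framework` and `--benchmark` are matched as name prefixes and that an empty selection means "run everything"; the benchmark ids are illustrative.

```typescript
// Hypothetical sketch of the runner's selection options; not the actual runner code.
interface Selection {
  frameworks: string[];
  benchmarks: string[];
}

// Collect every value that follows a --framework or --benchmark flag; other tokens are ignored.
function parseSelection(argv: string[]): Selection {
  const selection: Selection = { frameworks: [], benchmarks: [] };
  for (let i = 0; i < argv.length - 1; i++) {
    if (argv[i] === "--framework") selection.frameworks.push(argv[i + 1]);
    else if (argv[i] === "--benchmark") selection.benchmarks.push(argv[i + 1]);
  }
  return selection;
}

// Assumption: a name is selected if it starts with one of the given prefixes;
// an empty list selects everything.
function filterByPrefix(names: string[], prefixes: string[]): string[] {
  if (prefixes.length === 0) return names;
  return names.filter((name) => prefixes.some((p) => name.startsWith(p)));
}

const selection = parseSelection(["args", "--benchmark", "01_"]);
const benchmarkIds = ["01_run1k", "02_replace1k", "03_update10th1k"]; // hypothetical ids
console.log(filterByPrefix(benchmarkIds, selection.benchmarks));
```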

I think that you forgot something really important that I’ve tried to explain in this issue https://github.com/krausest/js-framework-benchmark/issues/22

Vanilla, domvm, vue2, Inferno 1.0 and everyone else who has “swap rows”/“remove row”/etc. below 40 ms just don’t use “track-by” algorithms.

Almost all other libraries will see huge improvements in the test cases “replace rows”/“swap rows”/“remove row” just by disabling “track-by” algorithms; is it hard to understand? What is the point of comparing implementations that don’t use a “track-by” algo with implementations that properly rearrange nodes? And it doesn’t mean that the “track-by” algo makes everything slower, it just means that the test cases that you are using in this benchmark are all biased toward implementations with “track-by” algo.

Vanilla even has this weird implementation for the remove row test case: https://github.com/krausest/js-framework-benchmark/blob/26367bcbe364ef6a6cde7cf0400009e617805efb/vanillajs/src/Main.js#L235 :)

typo: test cases that you are using in this benchmark are all biased toward implementations with **disabled** “track-by” algo.
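To make the “track-by” distinction discussed in these comments concrete, here is a hypothetical TypeScript model, not any framework's actual reconciler. `RowNode` stands in for a DOM row with a stable identity. Without track-by, the node at position i always renders item i, so a swap only changes texts; with track-by, each node stays bound to its item's id, so a swap moves nodes. A real keyed implementation also has to decide which DOM moves to issue, which this sketch leaves out.

```typescript
// Hypothetical model: a RowNode stands in for a DOM row with a stable identity.
interface RowNode {
  readonly nodeId: number;
  text: string;
}
interface Item {
  id: number;
  label: string;
}

let nextNodeId = 0;
function createNode(item: Item): RowNode {
  return { nodeId: nextNodeId++, text: item.label };
}

// Without track-by: the node at position i always renders items[i],
// so reordering items only changes node texts.
function patchNonKeyed(nodes: RowNode[], items: Item[]): RowNode[] {
  return items.map((item, i) => {
    const node = nodes[i] ?? createNode(item);
    node.text = item.label;
    return node;
  });
}

// With track-by: each node stays bound to its item's id,
// so reordering items reorders (moves) the nodes themselves.
function patchKeyed(nodes: RowNode[], oldItems: Item[], items: Item[]): RowNode[] {
  const byId = new Map<number, RowNode>();
  oldItems.forEach((item, i) => byId.set(item.id, nodes[i]));
  return items.map((item) => {
    const node = byId.get(item.id) ?? createNode(item);
    node.text = item.label;
    return node;
  });
}

// Number of positions that now hold a different node object (DOM moves or inserts).
function countMovedNodes(before: RowNode[], after: RowNode[]): number {
  return after.filter((node, i) => node !== before[i]).length;
}

const items: Item[] = [{ id: 1, label: "a" }, { id: 2, label: "b" }, { id: 3, label: "c" }];
const swapped = [items[2], items[1], items[0]];

const plainNodes = items.map(createNode);
const afterPlain = patchNonKeyed(plainNodes, swapped);
const keyedNodes = items.map(createNode);
const afterKeyed = patchKeyed(keyedNodes, items, swapped);
console.log(countMovedNodes(plainNodes, afterPlain), countMovedNodes(keyedNodes, afterKeyed));
```

The keyed variant keeps per-row DOM state (focus, input values) with the right item, at the price of moving elements; the non-keyed variant only rewrites text, which is the behavior the comments above say the swap and remove benchmarks favor.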

>vue 2-beta1 is amazing. It’s only 37% slower than vanillajs. This is a large improvement from vue 1, which is 116% behind vanillajs. The best thing about this improvement is that the application code stayed unchanged (take that angular!).

https://github.com/krausest/js-framework-benchmark/blob/415e6d2873642e42d4f3896e939eccdf60305357/vue-v1.0.26/index.html#L43

https://github.com/krausest/js-framework-benchmark/blob/415e6d2873642e42d4f3896e939eccdf60305357/vue-v2.0.0-beta1/index.html#L43

You got me. After drafting the blog post, the inferno update came along and I had to improve vanillajs. Comparing with vuejs, I noticed that vue 2 had the track-by in the resulting html and thus removed it. Anyway, the differences in code between angular 1 and angular 2 are a bit bigger.

> Aurelia – Though I doubt I’ll like it’s way of (not) dirty checking

You shouldn’t knock it if you haven’t tried it; it’s not much different from how anyone else handles it, right? ;)

I’m not sure I understand the comment regarding Aurelia and “(not) dirty checking.” Aurelia does not use dirty checking when working with class properties. It implements an observable (some would say “reactive”) strategy for being notified of changes. The Aurelia application as implemented for this benchmark uses no dirty checking at all.

That’s surely a matter of taste, but I’m not enthused that, e.g. for swapping rows, it’s not enough to just swap the references in the array.

Somebody should add Monkberry.

I’m up for it! Just send me a PR ;-)

How about adding a note about the framework size and maybe dependencies?

Rendering performance is one thing, but perf per kb may be interesting as well. I know that will not be a hard fact because most frameworks are modular.

My boss asked back in round 3 why Backbone.js is not represented. IIRC, I may have explained to him that Backbone is somewhat incomplete (without binding) and adding that for a benchmark here would be a subjective exercise… or did I just think that?

I’m no longer up-to-date with backbone, but as far as I remember in the old days you used backbone with a template engine and just rerendered the whole list. Angular and all the virtual dom frameworks changed that. I’d imagine that the performance of backbone + e.g. mustache would be very poor if done by rerendering the whole list. Personally I’m not interested in writing a benchmark for that but if someone sends me a pull request I’ll add it.

Could you please add knockout too?

Will be included in the next round.

I think backbone and backbone+handlebars.js should also be added to the tests.

What about ember.js? Why is it missing? It’s a rather popular framework, so it would be nice to see how it behaves in comparison to other frameworks.

I second including Ember.js. It was here in the previous round, but now it’s gone? It’s one of the most used JavaScript frameworks out there, so it would be great if it were included in the next round, especially considering that their new rendering engine, Glimmer 2, is close to being released.

I too would very much like to see the return of Ember to this table. My top 3 preferred are Ember, Inferno and Vue, and it was great to see some Vue 2 numbers added.

I’m assuming Ember was dropped for some other reason. (Like you wanted to postpone including it until you could include at least initial Glimmer 2 performance results?)

The table is really quite complete (at least for most people) with it, but really pretty incomplete without. To me that’s like leaving out React, or Angular, or both Vue and Inferno. We really need to see that included again!

But thanks for this outstanding effort. This is a *very* useful thing you do here. Much appreciated.

Ember will be included in the next round again. (It’s already in the repo).

I was just too afraid that it was my fault that ember performs so slowly.

What about Polymer?

The result showing React to be one of the slowest frameworks is puzzling. I am no UI expert, but I just started looking to pick one to learn. Until now, all I read about React was boasting about its performance, and this is the first and only article (so far) that downplays React’s performance. Don’t the Virtual DOM and other aspects help to deliver high performance?

> React v15.2 was 140% behind. It improved to 82% due to an optimization in react that makes the clear rows benchmark much faster.
>
> Ractive is currently of one the slowest framework being 260% behind. It shows an impressive improvement with 87% for the edge version.

I just saw this today and I think it’s awesome!

Will you be accounting for the issue that localvoid pointed out regarding the benchmark tests in the next round?

You forgot to add Snabbdom to the tests.

Can we have Elm (http://elm-lang.org) in the next round?

It would be great to see choo as well! It’s supposedly very fast, and very easy to use: https://github.com/yoshuawuyts/choo

I second the request for Elm.

+1 for elm :-) Hope you can make it happen.

Time for the next round, isn’t it? :)

Is inferno ready for production? I don’t see many people promoting it, and I can’t find apps using it in production. I am currently planning to start a PWA with inferno, given that it’s beating every other framework out there in performance, but I couldn’t gather enough support around it.