It’s been a few months since I published Round 2 of my JavaScript web frameworks performance comparison. In JavaScript land, months translate to years in other ecosystems, so a round 3 is more than justified.

Here’s what’s new:

- Added a pure JavaScript version (“vanillajs”) as a baseline for the benchmarks.

- Added cycle.js v6 and v7. What a difference the new version makes!

- Added inferno.js. This small framework made writing a faster vanillajs version challenging.

- Updated all frameworks to their current version.

- New benchmarks: Append 10,000 rows, Remove all rows, Swap two rows.

- Added two benchmarks that measure memory consumption directly after loading the page and after 1,000 rows have been added to the table.
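The three new benchmarks boil down to simple operations on the list of rows the table renders; what gets measured is how quickly each framework brings the DOM up to date afterwards. A minimal sketch of the data side, assuming a hypothetical `Row` shape and hypothetical helper names (none of this is taken from the benchmark repository, and the swap indices are left to the caller):

```typescript
// Hypothetical row shape; each benchmark app defines its own.
interface Row {
  id: number;
  label: string;
}

let nextId = 1;

// Build `count` new rows with increasing ids.
function buildRows(count: number): Row[] {
  const rows: Row[] = [];
  for (let i = 0; i < count; i++) {
    rows.push({ id: nextId, label: `row ${nextId}` });
    nextId++;
  }
  return rows;
}

// "Append 10,000 rows": add new rows after the existing ones.
function appendRows(rows: Row[], count: number): Row[] {
  return rows.concat(buildRows(count));
}

// "Remove all rows": the table becomes empty.
function clearRows(): Row[] {
  return [];
}

// "Swap two rows": exchange the rows at positions a and b,
// returning a new array and leaving the input untouched.
function swapRows(rows: Row[], a: number, b: number): Row[] {
  if (a < 0 || b < 0 || a >= rows.length || b >= rows.length) return rows;
  const copy = rows.slice();
  const tmp = copy[a];
  copy[a] = copy[b];
  copy[b] = tmp;
  return copy;
}
```

Returning new arrays instead of mutating in place suits frameworks that detect changes by reference; a framework with mutable observables would modify the list directly.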

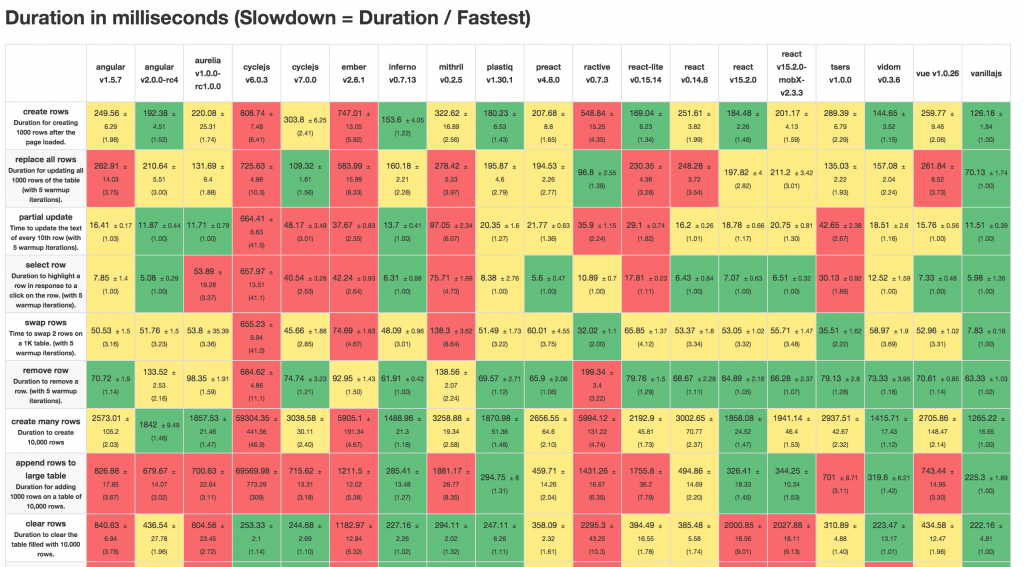

The result table for Chrome 51 on my MacBook Pro 15 reveals some surprising results:

(Update: cycle.js v7 was originally reported with an average slowdown factor of 2, but I had forgotten to remove a logging statement. Without it, the factor improved further to 1.8. The table has been updated.)

- Vanillajs is the fastest, but not by much, and only thanks to hard work.

- Inferno.js is an incredibly fast framework and only 1.3 times slower than vanillajs, which is simply amazing.

- plastiq comes in as the second-fastest framework, just 1.5 times slower, followed by vidom.

- React.js appears to have a major performance regression in the clear rows benchmarks. (Clearing all rows a third time is much faster. Two benchmarks point to that regression, which is a bit unfair to react.js.)

- Cycle.js v6 was by far the slowest; v7 changes that completely and leaves the group of slow frameworks, which now consists of ember, mithril and ractive.
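The “times slower” figures are ratios of durations. A minimal sketch of how such an average slowdown factor can be computed, assuming each result is divided by the fastest result for the same benchmark and the ratios are combined with a geometric mean (a common choice for averaging ratios; the result table may use a different average, and the `Results` shape is hypothetical):

```typescript
// Durations in milliseconds, one entry per benchmark, keyed by framework.
type Results = Record<string, number[]>;

// Slowdown of one framework on each benchmark relative to the fastest
// framework on that benchmark (1.0 means "as fast as the fastest").
function slowdowns(results: Results, framework: string): number[] {
  const names = Object.keys(results);
  return results[framework].map((duration, i) => {
    const fastest = Math.min(...names.map((n) => results[n][i]));
    return duration / fastest;
  });
}

// Geometric mean of the per-benchmark slowdowns.
function averageSlowdown(results: Results, framework: string): number {
  const factors = slowdowns(results, framework);
  const logSum = factors.reduce((sum, f) => sum + Math.log(f), 0);
  return Math.exp(logSum / factors.length);
}
```

With a geometric mean, a single pathological benchmark (like the clear rows regression mentioned above) pulls the average up less than it would with an arithmetic mean.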

All source code can be found in my GitHub repository. Contributions are always welcome: ember and aurelia could use some loving care, but I have to admit that I have no feelings for them. And I’d like to see a version of cycle.js with isolates.

Thanks a lot to Baptiste Augrain for contributing additional tests and frameworks!

I’d be interested in seeing the results for the upcoming Mithril 1.0 (https://github.com/lhorie/mithril.js/tree/rewrite), which has a new engine that is significantly faster than v0.2.5 on the dbmonster and vdom benchmarks.

Truthfully, v0.2.5 hasn’t gotten much attention in terms of performance for a while, since I’m focusing primarily on the v1.0 engine.

Very good idea to add memory figures.

Beware of garbage collection; it may compromise the results.

I hope we’ve accounted for that. We’re running Chrome with `--js-flags=--expose-gc`, calling window.gc() when measuring, and extracting the reported usedHeapSizeAfter from the timeline after the GC has happened.
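The two steps described in the reply can be sketched as follows. The trace event shape below is a simplified assumption (real timeline events carry many more fields), and the function names are hypothetical; only the `--js-flags=--expose-gc` flag, `gc()` and the usedHeapSizeAfter value come from the reply above.

```typescript
// Assumed, simplified shape of a timeline (trace) event.
interface TraceEvent {
  name: string;
  ts: number; // timestamp in microseconds
  args: { usedHeapSizeAfter?: number };
}

// Return the heap size reported by the last event that carries
// usedHeapSizeAfter (i.e. a GC event) at or after `since`,
// or undefined if no such event was recorded.
function heapAfterLastGc(events: TraceEvent[], since: number): number | undefined {
  let result: number | undefined;
  let latest = -Infinity;
  for (const e of events) {
    const size = e.args.usedHeapSizeAfter;
    if (e.ts >= since && size !== undefined && e.ts >= latest) {
      latest = e.ts;
      result = size;
    }
  }
  return result;
}

// In the page: trigger a full collection if Chrome was started with
// --js-flags=--expose-gc; otherwise `gc` is not defined and nothing happens.
function forceGc(): boolean {
  const g = globalThis as unknown as { gc?: () => void };
  if (typeof g.gc !== "function") return false;
  g.gc();
  return true;
}
```

Forcing a collection right before reading the value is what keeps garbage that happens to be lying around from inflating the memory figures.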

It would be interesting to see Vue.js 2.0 included.

Any chance of testing domvm [1] in round 4? It won’t break any records, but it should land somewhere in plastiq’s territory (hopefully ;)

[1] http://leeoniya.github.io/domvm/test/bench/dbmonster/

Also, in the ractive version, I see lots of calls to startMeasure() and stopMeasure(), which I don’t see in some others (like Vue).

I suppose I missed the relevant code elsewhere?!

OK, I went over the code and understand it now: the vue.js version simply lacks the code that measures and displays marks on the console.
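Helpers like startMeasure()/stopMeasure() appear to exist only to print timing marks on the console. A minimal sketch of such a pair, with hypothetical signatures (the repository’s own helpers may differ, for example by deferring the stop until after rendering); the clock is injectable so the sketch can be tested, and in the browser performance.now() would be the natural choice:

```typescript
// Module-level state for the measurement currently in progress.
let startTime = 0;
let currentLabel: string | null = null;

// Remember the label and the start timestamp.
function startMeasure(label: string, now: () => number = Date.now): void {
  currentLabel = label;
  startTime = now();
}

// Log and return the elapsed time, or null if no measurement is running.
function stopMeasure(now: () => number = Date.now): number | null {
  if (currentLabel === null) return null;
  const elapsed = now() - startTime;
  console.log(`${currentLabel} took ${elapsed} ms`);
  currentLabel = null;
  return elapsed;
}
```

For example, `startMeasure("run")` before creating rows and `stopMeasure()` afterwards logs a line such as “run took 12 ms”.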

Hi, thanks for this good work. I would be interested in Vue 2 and Monkberry.

http://monkberry.js.org/

Hi, author of Bobril here. Would you accept a pull request for my framework?

Monkberry seems to be a ractive clone.

You could maybe duplicate the ractive folder directly ;-)

@Boris: Of course! I’m looking forward to your pull request.

Hey Stefan,

Your Aurelia code relies on dirty checking (see the getter here: https://github.com/krausest/js-framework-benchmark/blob/master/aurelia-v1.0.0-rc1.0.0/src/app.js#L100)! This means performance is probably quite a bit worse than it would be in a real-life, properly written app. Our team is working on a pull request to your test code right now.

Awesome work otherwise, cheers!
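Dirty checking means the framework cannot be told when a getter’s value changes, so it has to re-evaluate the getter repeatedly and compare the result with the previous one. Aurelia lets a getter declare its dependencies (for instance with its computedFrom decorator) so it can be observed instead. A generic sketch of the dirty-checking idea, not Aurelia’s implementation (the class name and the `evaluations` counter are hypothetical):

```typescript
// Generic illustration of dirty checking: the getter is re-evaluated on
// every check and a change is reported only if the value differs.
class DirtyCheckedProperty<T> {
  private last: T;
  private readonly getter: () => T;
  private readonly onChange: (value: T) => void;
  evaluations: number; // how often the getter has been called

  constructor(getter: () => T, onChange: (value: T) => void) {
    this.getter = getter;
    this.onChange = onChange;
    this.last = getter();
    this.evaluations = 1;
  }

  // Called periodically (e.g. from a timer), whether or not anything changed.
  check(): void {
    this.evaluations++;
    const current = this.getter();
    if (current !== this.last) {
      this.last = current;
      this.onChange(current);
    }
  }
}
```

The cost grows with the number of dirty-checked getters and how often they are checked, even when nothing changes, which is why such a getter can drag down benchmark numbers.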

What is the criterion for green vs. yellow? Just curious, since some rows have more green than others, so I’m assuming there is a calculation somewhere rather than random color assignment.

Would you please include Knockout.js in your next benchmark? I have just started using it, as it makes enhancing existing traditional web applications very easy. But boy, does it seem slow compared to what I’ve seen of React and Angular.

Which would be a shame, as it still has quite a footing (e.g. the Azure cloud console is, as I understand it, built with KnockoutJS).

This is hard to digest. Red, yellow, green according to what?

Are there bar graphs available?

It would be nice if the “production” payload size were included for comparison, in addition to the memory footprint. I’ve been using Preact for a few things, as its payload size is very small, even though it doesn’t always perform as well.

Given the additional performance of Inferno, this could make an informed decision easier, as the memory footprint is similar.

Basically, green is used for values that are less than 33% above the second-best value and red for values that are more than 133% above it; values in between are yellow. There are also some adjustments for corner cases. Take a look at webdriver-java/makeTable.js if you want to see all the details.
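The basic rule can be written down as a small classification function. This is a sketch of the two thresholds only, assuming “second best” means the second-smallest duration in a benchmark row; the corner-case adjustments in makeTable.js are not reproduced, and the function names are hypothetical:

```typescript
type Color = "green" | "yellow" | "red";

// Second-smallest duration in a benchmark row (assumes at least two values).
function secondBest(values: number[]): number {
  const sorted = [...values].sort((a, b) => a - b);
  return sorted[1];
}

// Classify a duration by how far it lies above the second-best value:
// under 33% above is green, more than 133% above is red, otherwise yellow.
function colorFor(value: number, secondBestValue: number): Color {
  const over = (value - secondBestValue) / secondBestValue; // 0.33 = 33% above
  if (over < 0.33) return "green";
  if (over > 1.33) return "red";
  return "yellow";
}
```

With a second-best time of 100 ms, 120 ms is green, 150 ms is yellow and 250 ms is red. Comparing against the second-best rather than the best value keeps a single outstanding entry (such as vanillajs) from turning everything else yellow.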

Did the pull request from the Aurelia team change any of its values?

RiotJS?

I’d like to see Choo (a really lightweight framework, https://github.com/yoshuawuyts/choo) and Vue 2.0.

Hi,

You can add the Bobril framework.

Thx

Still working on it. There’s a not yet published class-name.binding that doesn’t work yet…

It’s in the repository. https://github.com/krausest/js-framework-benchmark/tree/master/riot-v2.5.0

Maybe you can take a look whether I’ve done something wrong.

That version performs very poorly. I’m not publishing any numbers until it’s been verified that this isn’t due to some mistake.

You’re welcome to make a pull-request ;-)

It’s already in the repository. First numbers look very promising, but it has a strange bug:

https://github.com/krausest/js-framework-benchmark/pull/11

Hi, Stefan! Great job!

Could you accept my pull request, which upgrades vidom to 0.3.14, rerun the tests and then update your result table? I’ve found and fixed a bottleneck in one of the tests (clear rows a 2nd time) that significantly penalized the overall result.

The Aurelia demo code is poorly written, not reflecting how a real developer would use the framework. We’ve submitted a PR which addresses the issues. We’ve also released some updates to the framework with a few performance improvements, thanks to these benchmarks. I’d love to see the chart updated to reflect the fixes since it significantly changes the results in our tests.

riotjs?

Already in the repository, but performance is not that good. Maybe you can check the code on https://github.com/krausest/js-framework-benchmark ?

Thanks, Rob. Indeed, the PR makes aurelia much faster. Results will be included in the next update to the benchmark (won’t take long). Other frameworks have also improved a lot (like vue 2 beta and vidom).

This is very, very nice, thanks! I want to move from Angular 1 to Aurelia, but that memory allocation table is scaring me…

I’d suggest color-coding the frameworks in the table by category. For example, Angular 1, Angular 2 and Aurelia fit into the same category of “complete” frameworks, whereas Inferno, React, Vidom, … are just libraries for building UIs.

I googled to see whether any other framework could take Angular’s place (besides Aurelia), only to find out that most of them would just replace React.

Great work and effort.

This might be misleading.

The table should be accompanied by a paragraph noting other factors, such as coding effort, development time, lines of code (payload) and non-UI support from the frameworks. These have a deep impact on a project; speed and memory are just some of the factors, and most of the time they are less significant.

It should also distinguish between a framework and a library. Angular/Aurelia are frameworks, React/Inferno are libraries, and this has a deep impact.

Examples:

A vanilla JS implementation is way harder and more time-consuming than using a framework, and way, way more time-consuming in the long term.

AngularJS, for example, is a complete toolset, which has a substantial impact.

Angular 2 is platform agnostic – also a great benefit in some scenarios.

It would be great to see results for Incremental DOM. Also, can anybody tell me what makes Inferno fast?